Content moderation tools have evolved from simple spam filters to sophisticated AI systems that make millions of decisions daily about acceptable political speech, fundamentally altering the boundaries of democratic discourse online.

Technological Evolution

2009-2012: Basic Automated Filtering. Early content moderation focused on spam, explicit content, and copyright violations using simple keyword filtering and user reporting systems.

2012-2016: Machine Learning Integration. Platforms began using machine learning to detect harassment, hate speech, and other policy violations at scale, reducing reliance on human moderators.

2016-2020: Political Content Focus. Election interference and misinformation campaigns led platforms to develop specialized tools for political content, including fact-checking integration and coordinated behavior detection.

2020-2022: Pandemic and Election Pressures. COVID-19 misinformation and election disputes intensified pressure on platforms to remove false information, leading to more aggressive automated enforcement.

2022-Present: AI Sophistication. Advanced natural language processing enables a more nuanced, though still imperfect, reading of context, sarcasm, and implicit meaning in political content.

Technical Capabilities

Modern content moderation systems employ various technologies:

Natural Language Processing. AI systems analyze text for policy violations such as hate speech, harassment, and misinformation, and are becoming better at inferring context and intent.

Image and Video Analysis. Computer vision models detect problematic visual content, including violent imagery, hate symbols, and manipulated media such as deepfakes.

Behavioral Pattern Detection. Systems identify coordinated inauthentic behavior, spam networks, and manipulation campaigns by analyzing posting patterns and account relationships.

Real-Time Processing. Automated moderation operates at massive scale, screening billions of posts daily with little or no human oversight for most decisions.

Cross-Platform Coordination. Some systems share information about problematic content and actors across platforms (for example, the hash-sharing database run by the Global Internet Forum to Counter Terrorism), so that harmful behavior removed from one service does not simply migrate to another.
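Coordinated-behavior detection draws on many signals. One of the simplest is a burst of near-identical text from many distinct accounts. The sketch below illustrates only that single signal, under stated assumptions: `Post`, `normalize`, `find_coordinated_clusters`, the sample posts, and both thresholds are hypothetical and do not represent any platform's actual method. Production systems also weigh account age, follower graphs, and shared infrastructure.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    account_id: str
    text: str
    timestamp: float  # seconds since some common epoch


def normalize(text: str) -> str:
    """Lowercase, drop URLs and punctuation, collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def find_coordinated_clusters(posts, min_accounts=3, window_seconds=600):
    """Flag texts that at least `min_accounts` distinct accounts posted
    within `window_seconds` of each other (hypothetical thresholds)."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    clusters = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        best_accounts = set()
        # Slide a time window over the posts that share this normalized text.
        for end in range(len(group)):
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account_id for p in group[start:end + 1]}
            if len(accounts) >= min_accounts and len(accounts) > len(best_accounts):
                best_accounts = accounts
        if best_accounts:
            clusters.append((text, sorted(best_accounts)))
    return clusters


# Hypothetical sample: three accounts push the same slogan within two minutes.
posts = [
    Post("a1", "Vote NO on Measure 5! https://example.com/x", 0),
    Post("a2", "vote no on measure 5", 60),
    Post("a3", "Vote no on Measure 5!!", 120),
    Post("b1", "Lovely weather at the rally today", 90),
]
print(find_coordinated_clusters(posts))
# [('vote no on measure 5', ['a1', 'a2', 'a3'])]
```

Normalization is the key design choice. Without it, trivial variations in case, punctuation, or appended links would split one campaign into many apparently unrelated posts. The same example also shows the risk discussed later under bias: genuine supporters who copy a campaign slogan would be flagged just as readily.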

Political Speech Challenges

Moderating political content presents unique difficulties:

Context Dependency. Political speech often relies on cultural context, historical references, and implicit meaning that automated systems struggle to interpret accurately.

Satirical and Hyperbolic Language. Political discourse frequently uses exaggeration, sarcasm, and provocative rhetoric that automated systems can misread; a line such as "this bill will kill small businesses" is hyperbole, not a threat.

Newsworthiness Exceptions. Platforms struggle to balance removing harmful content against preserving newsworthy posts by public figures.

Cultural and Linguistic Variation. Global platforms must account for differing cultural norms and languages when moderating political content across diverse user bases, and automated tools are often less accurate in languages with less available training data.

Rapidly Evolving Narratives. Political misinformation and manipulation tactics change quickly, for example through coded language and deliberate misspellings meant to evade filters, so detection systems need constant updating.
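Several of these difficulties are easiest to see in the simplest technique from the early filtering era: matching words against a blocklist. In the hypothetical sketch below, `BLOCKLIST`, `keyword_flag`, and the sample posts are illustrative assumptions. The filter flags a hyperbolic complaint and a news report because it ignores context, yet it misses a threatening post that evades the list with a single misspelling.

```python
import re

# Hypothetical blocklist; real systems use far larger lists plus ML classifiers.
BLOCKLIST = {"kill", "attack", "destroy"}


def keyword_flag(text: str) -> bool:
    """Flag a post if any whole word is on the blocklist, ignoring context."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return any(word in BLOCKLIST for word in words)


examples = {
    "hyperbole": "This tax bill will kill small businesses.",
    "news report": "Witnesses say protesters were told to attack the building.",
    "evasion": "Someone should k1ll the senator.",
    "benign": "Join us at the town hall on Tuesday.",
}

for label, text in examples.items():
    print(label, keyword_flag(text))
# hyperbole True
# news report True
# evasion False
# benign False
```

The first two results are false positives and the third is a false negative. Modern classifiers reduce both kinds of error, but they do not eliminate them.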

Bias and Fairness Concerns

Automated moderation systems face significant bias challenges:

Training Data Bias. Machine learning systems reflect biases in their training data and labels, potentially leading to discriminatory enforcement against certain political viewpoints or communities.

False Positive Rates. Overaggressive moderation can suppress legitimate political speech, with errors falling disproportionately on marginalized communities and on controversial but legal viewpoints.

Inconsistent Enforcement. Automated systems may treat similar content differently, fueling perceptions of political bias or unfair treatment.

Transparency Deficits. Platforms rarely provide detailed information about how their moderation algorithms work, making it difficult to identify and address bias.

Appeal Limitations. At high volumes, meaningful human review of contested decisions is hard to provide, so wrongly removed political speech may never be restored.
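One common way to quantify the false-positive problem is to compare false positive rates across groups. Equal false positive rates across groups is the fairness criterion known as "predictive equality," which is one half of "equalized odds." The sketch below computes this metric over an audit sample. The function name, the group labels, and the records are hypothetical toy data, not measurements from any platform.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate, FP / (FP + TN), computed only over
    posts that human reviewers judged NOT to violate policy."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, violates, flagged in records:
        if not violates:
            negatives[group] += 1
            if flagged:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


# Toy, made-up audit sample: (group, reviewer says violating?, model flagged?)
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, True),  ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", False, True),  ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True),
]

rates = false_positive_rates(records)
print(rates)  # {'group_a': 0.25, 'group_b': 0.5}
print(max(rates.values()) - min(rates.values()))  # gap between groups: 0.25
```

Restricting the denominator to non-violating posts is what makes this a false positive rate rather than an overall error rate. A gap like the one in this toy sample means legitimate posts from one group are removed twice as often. Detecting such gaps requires reviewer-labeled audit data, which is why researcher access matters.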

Democratic Implications

Content moderation tools raise fundamental questions about democratic discourse:

Private Platform Power. Major platforms’ moderation decisions effectively set the boundaries of political speech, concentrating significant power over democratic discourse in private companies.

Censorship vs. Safety. Balancing free speech principles against protection from harmful content requires difficult judgments about the boundaries of acceptable political discourse.

Global vs. Local Standards. International platforms must navigate different cultural and legal standards for political speech across multiple jurisdictions.

Transparency and Accountability. Democratic oversight of moderation decisions is complicated by technical complexity and by a legitimate concern that full disclosure would help bad actors game the rules.

Innovation vs. Regulation. Government regulation of content moderation could stifle technological innovation, while inadequate oversight may undermine democratic values.

Regulatory Responses

Governments worldwide are developing policies for content moderation:

Transparency Requirements. Some jurisdictions, notably the European Union under its Digital Services Act, require platforms to publish detailed reports about their moderation practices and enforcement statistics.

Due Process Standards. Regulations increasingly require platforms to provide meaningful appeal processes and human review for content removal decisions.

Illegal Content Mandates. Laws in various countries, such as Germany's Network Enforcement Act (NetzDG), require rapid removal of specific types of illegal content, though definitions vary significantly.

Algorithmic Auditing. Regulations and proposals increasingly call for independent audits of moderation systems, including for bias, and for researcher access to platform data; the Digital Services Act requires independent audits and vetted-researcher data access for the largest platforms.

Section 230 Debates. In the US, debate over reforming Section 230 of the Communications Decency Act, which shields platforms from liability for most user-posted content and for good-faith moderation decisions, continues without clear resolution.
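Due-process requirements ultimately determine where automation stops and human review begins in a moderation pipeline. The hypothetical sketch below routes a classifier's violation score to an action. The `route` function, the `Action` values, the `political` flag, and both thresholds are illustrative assumptions; no regulation mentioned here prescribes these numbers. The design choice shown is that content tagged as political is never removed automatically and is instead sent to a human.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route(score: float, political: bool = False,
          remove_threshold: float = 0.95, review_threshold: float = 0.70) -> Action:
    """Map a violation probability to an action (hypothetical thresholds).

    High-confidence violations are removed automatically unless the post is
    tagged political; those, and all mid-confidence scores, go to a human.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if score >= review_threshold and (political or score < remove_threshold):
        return Action.HUMAN_REVIEW
    if score >= remove_threshold:
        return Action.REMOVE
    return Action.ALLOW


print(route(0.97).value, route(0.97, political=True).value,
      route(0.80).value, route(0.30).value)
# remove human_review human_review allow
```

Lowering `review_threshold` sends more borderline posts to people, which improves accuracy but raises the review workload that makes appeals hard to sustain at scale.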

Future Challenges

Content moderation continues to evolve rapidly:

AI Arms Race. As AI-generated content becomes more sophisticated, moderation systems must evolve to detect increasingly realistic fake content.

Decentralized Platforms. Federated and decentralized architectures, such as Mastodon and the wider fediverse, may complicate traditional content moderation approaches and shift responsibility to users or smaller operators.

Real-Time Verification. Platforms face increasing pressure to fact-check and verify content in real time during breaking news events and political crises.

International Coordination. The cross-border nature of online platforms calls for international cooperation on content standards, which remains politically difficult.

Content moderation tools represent one of the most consequential technological developments affecting political discourse, with decisions made by these systems potentially influencing election outcomes, policy debates, and the overall health of democratic society.

Related Entities

Filter Timeline

| Date | Event |

|---|---|

| Content Moderation Tools introduced Supporting |

Network Graph

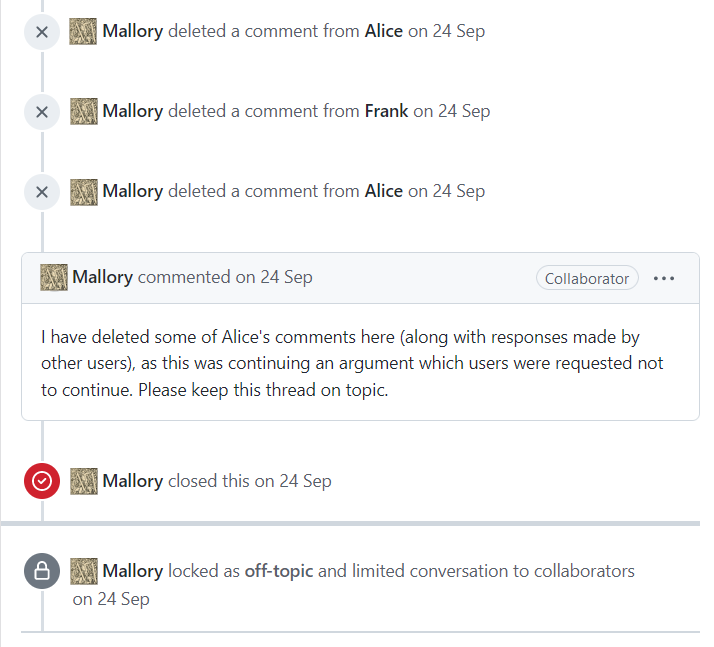

Network visualization showing Content Moderation Tools's connections and technological relationships.